Human Posture Datasets

Author: Bernard Boulay


In the work described in [1], [2] and [3], we are interested in recognising static human postures in image sequences. The postures are classified into four general postures and eight detailed postures:

standing postures: standing with one arm up, standing with arms near the body, T-shape

sitting postures: sitting on a chair, sitting on the floor

bending postures

lying postures: lying with spread legs, lying with curled up legs
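The two-level classification above can be captured in a small lookup table. This is a minimal Python sketch: the string labels and the `general_posture` helper are hypothetical names chosen for illustration, not identifiers used by the dataset or by [1], [2] and [3].

```python
# Two-level posture hierarchy: four general postures, refined into
# eight detailed postures. Labels are HYPOTHETICAL illustration strings.
POSTURES: dict[str, list[str]] = {
    "standing": ["standing with one arm up", "standing with arms near the body", "T-shape"],
    "sitting": ["sitting on a chair", "sitting on the floor"],
    "bending": ["bending"],  # bending has a single detailed posture
    "lying": ["lying with spread legs", "lying with curled up legs"],
}


def general_posture(detailed: str) -> str:
    """Return the general posture class that a detailed posture belongs to."""
    for general, details in POSTURES.items():
        if detailed in details:
            return general
    raise ValueError(f"unknown detailed posture: {detailed!r}")


if __name__ == "__main__":
    print(len(POSTURES), sum(len(d) for d in POSTURES.values()))  # 4 8
    print(general_posture("T-shape"))  # standing
```

Keeping the detailed postures as lists under their general class makes it easy to evaluate a recogniser at either level of detail.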

During this work, several image sequences were acquired to build a dataset of static postures (Real data). A 3D human model was also used to generate synthetic data from many points of view. Since our posture recognition algorithm is based on silhouettes (binary images that separate the observed person from the background), the synthetic dataset consists of 3D model silhouettes. Finally, a non-exhaustive list of other related datasets is given in the More datasets section.

Real data

Several sequences were acquired in an office of our laboratory with a static camera. All sequences share the same point of view, and a camera calibration matrix is available.

The camera is located at [camera position not recoverable] in the scene coordinate system (in cm).

The color images are 388x284 pixels and are stored in JPEG format.
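A calibration matrix lets one relate 3D scene points (in cm) to pixels of the 388x284 images. The sketch below assumes that the available calibration is a standard 3x4 pinhole projection matrix P acting on homogeneous coordinates; the matrix values are not given in this text, so `EXAMPLE_P` is entirely hypothetical, as are the function names.

```python
# Sketch: project a 3D scene point (cm) to pixel coordinates with a 3x4
# projection matrix P in homogeneous coordinates: [u*w, v*w, w] = P [X, Y, Z, 1].
# ASSUMPTION: the dataset's calibration matrix has this 3x4 form.
# EXAMPLE_P is HYPOTHETICAL (focal length 300 px, principal point at the image
# centre, camera at the origin looking along +Z), not the dataset's calibration.

IMAGE_WIDTH, IMAGE_HEIGHT = 388, 284  # image size of the real sequences

EXAMPLE_P = [
    [300.0, 0.0, 194.0, 0.0],
    [0.0, 300.0, 142.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
]


def project(P, point_cm):
    """Map a scene point (X, Y, Z) in cm to image coordinates (u, v) in pixels."""
    x = (*point_cm, 1.0)  # homogeneous scene point
    u_w, v_w, w = (sum(p * xi for p, xi in zip(row, x)) for row in P)
    if w <= 0:
        # With this matrix's sign convention, w <= 0 means behind the camera.
        raise ValueError("point is at infinity or behind the camera")
    return u_w / w, v_w / w


def in_image(uv):
    """True if pixel coordinates fall inside the 388x284 image."""
    u, v = uv
    return 0 <= u < IMAGE_WIDTH and 0 <= v < IMAGE_HEIGHT


if __name__ == "__main__":
    uv = project(EXAMPLE_P, (100.0, 50.0, 500.0))
    print(uv, in_image(uv))  # (254.0, 172.0) True
```

With the real calibration matrix substituted for `EXAMPLE_P`, the same two functions can check, for instance, whether a point on the floor of the office is visible in the frame.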

In each sequence, the person performs the different postures while turning on the spot, so that all orientations are covered. For each person, three image sequences are provided: the first contains the standing postures (seq1), the second the sitting and bending postures (seq2), and the third the lying postures (seq3). Sequences are available for four different people.

Person 1: [person1.tar.gz] (size: 39.4 MB)

seq1: 512 frames

seq2: 601 frames

seq3: 532 frames

Person 2: [person2.tar.gz] (size: 72.1 MB)

seq1: 1072 frames

seq2: 1010 frames

seq3: 926 frames

Person 3: [person3.tar.gz] (size: 68.7 MB)

seq1: 821 frames

seq2: 1062 frames

seq3: 817 frames

Person 4: [person4.tar.gz] (size: 65.6 MB)

seq1: 729 frames

seq2: 779 frames

seq3: 1179 frames
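The frame counts listed above can be tallied per person and per sequence type with a few lines of Python. Only the numbers come from the list; the dictionary layout and function names are hypothetical.

```python
# Frame counts from the list above (person -> sequence -> number of frames).
# seq1: standing, seq2: sitting and bending, seq3: lying.
FRAMES = {
    "person1": {"seq1": 512, "seq2": 601, "seq3": 532},
    "person2": {"seq1": 1072, "seq2": 1010, "seq3": 926},
    "person3": {"seq1": 821, "seq2": 1062, "seq3": 817},
    "person4": {"seq1": 729, "seq2": 779, "seq3": 1179},
}


def frames_per_person(frames=FRAMES):
    """Total number of frames for each person over the three sequences."""
    return {person: sum(seqs.values()) for person, seqs in frames.items()}


def frames_per_sequence(frames=FRAMES):
    """Total number of frames for each sequence type over all people."""
    totals = {}
    for seqs in frames.values():
        for seq, count in seqs.items():
            totals[seq] = totals.get(seq, 0) + count
    return totals


if __name__ == "__main__":
    print(frames_per_person())
    # {'person1': 1645, 'person2': 3008, 'person3': 2700, 'person4': 2687}
    print(frames_per_sequence())
    # {'seq1': 3134, 'seq2': 3452, 'seq3': 3454}
    print(sum(frames_per_person().values()))  # 10040
```

The real data therefore total 10040 frames, spread fairly evenly across the three posture groups.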

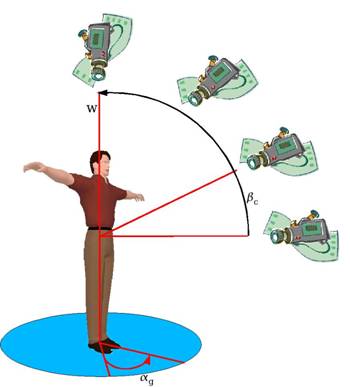

A 3D human model is used to generate binary silhouettes of the different postures of interest for several points of view according to the experimentation shows in figure [].

The proposed dataset was generated with $\beta_c$ varying from $-90^\circ$ to $90^\circ$ in steps of $45^\circ$, and with $\alpha_g$ sampled in steps of $45^\circ$. The posture, the orientation on the ground plane and the point of view are encoded in the image file name: imageBinaire_p_bbegin_bend_bstep_astep_repository.pbm. The images are in PBM format.
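The angular sampling described above can be enumerated as a grid of viewpoints. This Python sketch assumes that $\alpha_g$ covers one full turn, $[0^\circ, 360^\circ)$, which the text does not state explicitly. The parameter names echo the bbegin/bend/bstep/astep fields of the file name, which we read as the $\beta_c$ range and step and the $\alpha_g$ step; that reading is also an assumption, and `viewpoints` is a hypothetical helper.

```python
# Sketch: enumerate the (beta_c, alpha_g) viewpoints of the synthetic silhouettes.
# From the text: beta_c goes from -90 to 90 degrees in steps of 45 degrees,
# and alpha_g is sampled every 45 degrees.
# ASSUMPTION: alpha_g covers one full turn, [0, 360) degrees.
# ASSUMPTION: parameter names mirror the file-name fields bbegin/bend/bstep/astep.


def viewpoints(bbegin: int = -90, bend: int = 90, bstep: int = 45,
               astep: int = 45) -> list[tuple[int, int]]:
    """Return all (beta_c, alpha_g) pairs in degrees; steps must be positive integers."""
    if bstep <= 0 or astep <= 0:
        raise ValueError("angle steps must be positive")
    betas = range(bbegin, bend + 1, bstep)  # bend included if it lies on the step grid
    alphas = range(0, 360, astep)           # one full turn, 360 excluded
    return [(beta, alpha) for beta in betas for alpha in alphas]


if __name__ == "__main__":
    grid = viewpoints()
    print(len(grid))  # 40
    print(grid[0], grid[-1])  # (-90, 0) (90, 315)
```

With the default values this gives 5 values of $\beta_c$ times 8 values of $\alpha_g$, i.e. 40 viewpoints per posture; changing the step arguments mimics generating a custom dataset with different angular resolution.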

[manSilhouettes.tar.gz] 400 frames, 100.9 KB

[womanSilhouettes.tar.gz] 400 frames, 107.0 KB
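The silhouettes are distributed as PBM files. The minimal Python reader below follows the Netpbm PBM format, which has a plain (P1) and a raw (P4) variant; which variant the archives use is not stated, so both are handled. By PBM convention a 1 is a black pixel; whether black marks the person or the background in these files is not stated and should be checked on a sample image. The function names are hypothetical.

```python
from pathlib import Path


def _header_tokens(data: bytes, count: int) -> tuple[list[bytes], int]:
    """Read `count` whitespace-separated header tokens, skipping '#' comments.
    Returns the tokens and the offset just after the last token."""
    tokens, i, n = [], 0, len(data)
    while len(tokens) < count:
        # Skip whitespace and comments (a comment runs to the end of the line).
        while i < n and (data[i:i + 1].isspace() or data[i:i + 1] == b"#"):
            if data[i:i + 1] == b"#":
                while i < n and data[i:i + 1] not in (b"\n", b"\r"):
                    i += 1
            else:
                i += 1
        start = i
        while i < n and not data[i:i + 1].isspace() and data[i:i + 1] != b"#":
            i += 1
        if start == i:
            raise ValueError("truncated PBM header")
        tokens.append(data[start:i])
    return tokens, i


def parse_pbm(data: bytes) -> list[list[int]]:
    """Decode a plain (P1) or raw (P4) PBM image into rows of 0/1 pixels."""
    (magic, w, h), i = _header_tokens(data, 3)
    width, height = int(w), int(h)
    if magic == b"P4":
        i += 1  # exactly one whitespace byte separates header and raster
        row_bytes = (width + 7) // 8  # each row is padded to a whole byte
        raster = data[i:i + row_bytes * height]
        if len(raster) < row_bytes * height:
            raise ValueError("truncated PBM raster")
        # Pixels are packed 8 per byte, most significant bit first.
        return [
            [(raster[r * row_bytes + c // 8] >> (7 - c % 8)) & 1 for c in range(width)]
            for r in range(height)
        ]
    if magic == b"P1":
        # Plain PBM: ASCII '0'/'1' digits, whitespace between them is ignored.
        bits = [int(ch) for ch in data[i:].decode("ascii", errors="ignore") if ch in "01"]
        if len(bits) < width * height:
            raise ValueError("truncated PBM raster")
        return [bits[r * width:(r + 1) * width] for r in range(height)]
    raise ValueError(f"not a PBM file (magic {magic!r})")


def load_pbm(path: str) -> list[list[int]]:
    """Read a PBM file from disk and decode it."""
    return parse_pbm(Path(path).read_bytes())


if __name__ == "__main__":
    sample = b"P1\n# tiny example\n3 2\n1 0 1\n0 1 0\n"
    print(parse_pbm(sample))  # [[1, 0, 1], [0, 1, 0]]
```

Once an image is decoded, simple silhouette measurements follow directly; for example, `sum(map(sum, rows))` counts the pixels set to 1.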

An executable that generates this dataset is available for Linux. The parameters $\alpha_g$ and $\beta_c$ can be modified to obtain a custom dataset.

Publications

[1] Boulay, B. Human Posture Recognition for Behaviour Understanding. PhD thesis, Université de Nice Sophia-Antipolis, to appear.

[2] Boulay, B., Bremond, F. and Thonnat, M. Applying 3D Human Model in a Posture Recognition System. Pattern Recognition Letters, Special Issue on Vision for Crime Detection and Prevention, 2006, 27(15), pp. 1788-1796.

[3] Boulay, B., Bremond, F. and Thonnat, M. Posture Recognition with a 3D Human Model. Proceedings of the IEE International Symposium on Imaging for Crime Detection and Prevention, 2005.

More datasets

Datasets of whole-body human postures are very rare (to our knowledge, none exist). Datasets do exist for hand postures and head pose. The most closely related research field is gait analysis, but its data are limited to standing postures. We therefore list several gait datasets with a short description of each:

The data were collected over four days, May 20-21, 2001 and November 15-16, 2001, at the University of South Florida (USF), Tampa. About 33 subjects are common to the May and November collections. Informed consent approved by the USF IRB was obtained from all subjects in this dataset. The dataset consists of people walking along elliptical paths in front of the camera(s). Each person walked several (at least five) circuits around an ellipse, of which the last circuit forms the dataset. The May acquisition without briefcase contains 452 sequences from 74 subjects (300 GB), and the complete data contain 1870 sequences from 122 subjects (1.2 TB). The silhouettes computed by the dataset authors' algorithm on the complete dataset are also available.

240 image sequences were captured outdoors under natural light for 20 subjects. They cover three views with respect to the image plane: lateral, frontal and oblique.

Automatic Gait Recognition for Human ID at a Distance

The Southampton Human ID at a distance gait database consists of two major segments: a large-population (about 100 subjects) but basic database, and a small-population but more detailed database. The large database is intended to address two questions: whether gait is individual across a significant number of people under normal conditions, and to what extent research effort needs to be directed towards biometric algorithms or towards computer vision algorithms for accurate extraction of subjects. The small database is intended to investigate the robustness of biometric techniques to imagery of the same subject in various common conditions (carrying items, wearing different clothing or footwear). The database is around 350 GB in size.

(Carnegie Mellon University)

The subjects walk on a treadmill and are recorded by six synchronized high-quality cameras (3CCD, progressive scan). The resulting color images have a resolution of 640x480. Each subject is recorded performing four types of walking: slow walk, fast walk, incline walk, and slow walk holding a ball. Each sequence is 11 seconds long, recorded at 30 frames per second.
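As a rough size estimate, assuming that each of the six cameras records every 11-second sequence in full, the number of frames per subject works out as:

$$
\begin{aligned}
11\ \text{s} \times 30\ \text{frames/s} &= 330 \text{ frames per camera and walking type},\\
330 \times 6\ \text{cameras} \times 4\ \text{walking types} &= 7920 \text{ frames per subject}.
\end{aligned}
$$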

The video database contains six types of human actions (walking, jogging, running, boxing, hand waving and hand clapping) performed several times by 25 subjects in four scenarios: outdoors, outdoors with scale variation, outdoors with different clothes, and indoors. The database currently contains 2391 sequences. All sequences were recorded over homogeneous backgrounds with a static camera at 25 fps, downsampled to a spatial resolution of 160x120 pixels, and are four seconds long on average.

Update 15 December 2006 (in progress)